An introduction to calling native code from JavaScript, using either the NodeJS N-API or WebAssembly, and the performance gains you might expect.

After previously selecting JavaScript for my blog projects, I came across an interesting presentation on YouTube regarding JavaScript performance. In the video, a developer working on the Google V8 JavaScript engine provided insight into how JavaScript performance compares with native code. The talk demonstrated calculating the first million primes using programs written in JavaScript, C++ and WebAssembly. Overall it was an interesting talk illustrating the performance gains that JavaScript has achieved.

A Question

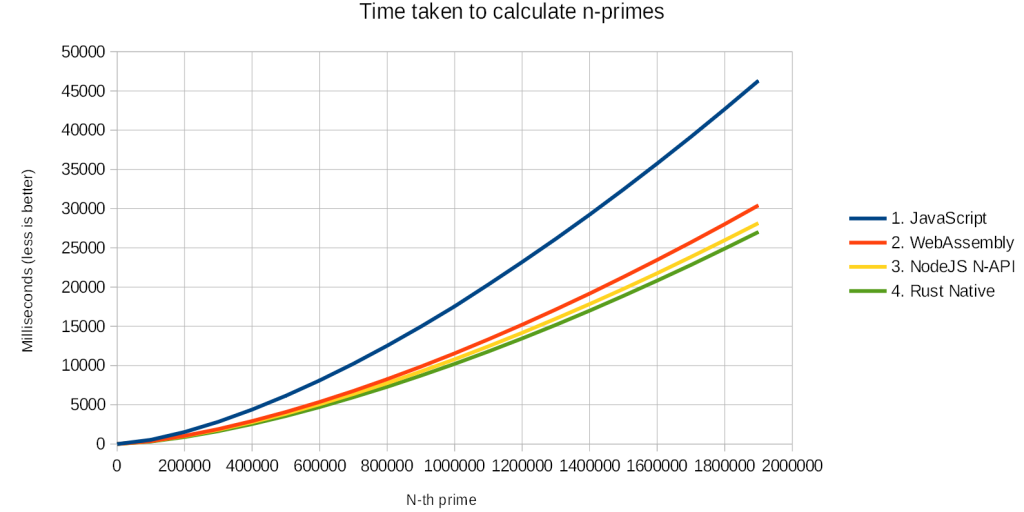

Towards the end of the video, a graph showed the relative performance between JavaScript, C++ and WebAssembly when finding the n-th prime number. Admittedly this is a theoretical example, but one whose results are consistent with my own experiences. In practice, JavaScript’s performance is generally good enough for most tasks.

The benchmarks tested each language separately, one at a time.

How would the performance be affected if we used JavaScript and replaced only the prime test function with C++ or WebAssembly?

In other words, could we speed up JavaScript by replacing just the prime test function with native code?

Background

Writing time-critical functions with code from a faster language has a long history in programming, representing an attempt to get the best of both worlds. The developer will write the program in a slower, more expressive language, only using the faster, lower-level one where performance is an issue. If used carefully, the technique can help reduce the development time and produce more maintainable code, while improving the overall performance.

The same should apply to JavaScript. However, on the few occasions that I have looked for faster native NPM modules, the results were mixed. Sometimes I have seen speed improvements and other times not. Was this just a case of a poorly written module? Or was there something else at play? I did not look further into this inconsistency as JavaScript was generally good enough.

So seeing this video provided food for thought. Let’s investigate.

Finding the Nth prime

The first task is to replicate the benchmark from the video in JavaScript under NodeJS. Finding the nth prime relies on a primality test: for each candidate we try all integers from 2 up to the square root of the candidate, looking for one that divides it cleanly. The numbers 0 and 1 are known special cases. Here is the base JavaScript version of the function that determines whether a number is prime.

    function isPrimeJS(n) {
      if (n <= 1) {
        return false;
      }
      var j = 2;
      while (j * j <= n) {
        if (n % j === 0)
          return false;
        j++;
      }
      return true;
    }

We also multiply j by itself to avoid calling a square root function, although it is debatable whether that is worthwhile. In any event, this should be good enough to use as the core of our comparison.
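The prime test is only half of the benchmark; something still has to call it until the nth prime turns up. A minimal sketch of that outer loop follows. The helper name `nthPrime` is hypothetical and not taken from the video; it accepts the prime test as a parameter, so the pure JavaScript version can later be swapped for a native one while the loop stays in JavaScript.

```javascript
// Same trial-division test as above, repeated so this sketch runs on its own.
function isPrimeJS(n) {
  if (n <= 1) {
    return false;
  }
  for (let j = 2; j * j <= n; j++) {
    if (n % j === 0) {
      return false;
    }
  }
  return true;
}

// Hypothetical helper: returns the count-th prime (1-based, count >= 1),
// using whichever prime test is passed in as `isPrime`.
function nthPrime(count, isPrime) {
  let found = 0;
  let candidate = 1;
  while (found < count) {
    candidate++;
    if (isPrime(candidate)) {
      found++;
    }
  }
  return candidate;
}

console.log(nthPrime(10, isPrimeJS)); // 29
```

Passing the test function in as an argument keeps the benchmark loop identical across the JavaScript, N-API and WebAssembly runs, so any difference in timing comes from the test function and the cost of calling it.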

The test

I used the prime-number problem from the original video for this test. However, I doubled the number of primes searched to allow for any shortcomings of my setup and conducted only 20 discrete timings so as not to skew the results. Furthermore, I limited the tests to 32-bit integers to provide a consistent result. I ran the tests using the 64-bit version of NodeJS under Windows 10.
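The repeated timings could be collected along the following lines. This is only a sketch: the helper `timeRuns` and the stand-in workload `sampleWork` are hypothetical names, and the real tests would run the prime search in place of the stand-in.

```javascript
// Hypothetical timing helper: calls `work` `runs` times and returns the
// elapsed time of each run in milliseconds.
function timeRuns(work, runs) {
  const timings = [];
  for (let i = 0; i < runs; i++) {
    const start = process.hrtime.bigint(); // nanoseconds, as a BigInt
    work();
    const end = process.hrtime.bigint();
    timings.push(Number(end - start) / 1e6);
  }
  return timings;
}

// Stand-in workload so the sketch runs on its own.
function sampleWork() {
  let total = 0;
  for (let i = 0; i < 100000; i++) {
    total = (total + i) | 0; // "| 0" keeps the running total a 32-bit integer
  }
  return total;
}

const timings = timeRuns(sampleWork, 20);
const mean = timings.reduce((a, b) => a + b, 0) / timings.length;
console.log(`${timings.length} runs, mean ${mean.toFixed(3)} ms`);
```

Keeping each run as a separate timing, rather than one long total, makes it easier to spot a single slow run caused by something else on the machine.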

Native version

With the JavaScript prime test working as expected, it was time to put together the native version. This was a case of moving over to Visual Studio and constructing the version in C++. While this worked, it would be good if the code ran across platforms and avoided using Windows-specific system calls. As a possible approach, I developed a solution in Rust. For this test at least, its performance was indistinguishable from C++. Hence, I decided to employ Rust for the pure native code test. Details on how to install Rust can be found on the official site.

NodeJS N-API version

The N-API is a relatively recent addition to the Node ecosystem, providing a stable interface for calling native C/C++ functions from within NodeJS. The interface is provided by Node itself and offers separation from the underlying V8 engine. Despite this, some boilerplate code is required to construct the C++ functions, as can be seen below. Further information regarding N-API can be found in the official documentation.

    #include <node_api.h>

    // Prime function: returns 1 if the 32-bit integer argument is prime, otherwise 0
    napi_value IsPrime32(napi_env env, napi_callback_info info) {
      int32_t n = 0;
      size_t argc = 1;
      napi_value argv[1];
      napi_status status;

      // Fetch the arguments passed in from JavaScript
      status = napi_get_cb_info(env, info, &argc, argv, NULL, NULL);
      if (status != napi_ok) {
        napi_throw_error(env, NULL, "Problem parsing arguments");
        return NULL;  // stop here so the pending exception reaches JavaScript
      }

      // Convert the first argument to a 32-bit integer
      status = napi_get_value_int32(env, argv[0], &n);
      if (status != napi_ok) {
        napi_throw_error(env, NULL, "Invalid argument");
        return NULL;
      }

      // Trial division; note that j * j overflows for n above 46340 * 46340
      int isPrime = 1;
      if (n <= 1) {
        isPrime = 0;
      } else {
        for (int32_t j = 2; (j * j) <= n; j++) {
          if ((n % j) == 0) {
            isPrime = 0;
            break;
          }
        }
      }

      // Wrap the result as a JavaScript number
      napi_value result;
      status = napi_create_int32(env, isPrime, &result);
      if (status != napi_ok) {
        napi_throw_error(env, NULL, "Unable to create return value");
        return NULL;
      }
      return result;
    }

    // Initialisation function: exposes IsPrime32 to JavaScript as isPrime32
    napi_value Init(napi_env env, napi_value exports) {
      napi_value fn;
      napi_status status;

      status = napi_create_function(env, NULL, 0, IsPrime32, NULL, &fn);
      if (status != napi_ok) {
        napi_throw_error(env, NULL, "Wrapping native function");
        return NULL;
      }

      status = napi_set_named_property(env, exports, "isPrime32", fn);
      if (status != napi_ok) {
        napi_throw_error(env, NULL, "Populating exports");
        return NULL;
      }
      return exports;
    }

    NAPI_MODULE(NODE_GYP_MODULE_NAME, Init)

The node-gyp tool is used to build the add-on so that it can be called from within JavaScript. At the very least, a binding.gyp file is required for this.

    {
      "targets": [
        {
          "target_name": "prime",
          "sources": [ "./prime.cc" ]
        }
      ]
    }

To build the addon, open a command line at the root of the project. Once node-gyp and the API package are installed, npm run build builds the addon. This assumes that package.json defines a build script, typically one that calls node-gyp rebuild.

    $ npm install node-gyp --save-dev
    $ npm install node-addon-api
    $ npm run build

Once built, it is then possible to run the addon from the NodeJS code with only a couple of lines.

    const addon = require('./build/Release/prime');
    addon.isPrime32(19); // Returns 1
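Since the addon only exists after a successful build, one option is to fall back to the pure JavaScript test when it cannot be loaded. The following is a minimal sketch of that idea rather than the code used in these tests; the helper `loadIsPrime` is a hypothetical name, and the path assumes the default node-gyp output directory with the "prime" target shown above.

```javascript
// Pure JavaScript fallback, used when the compiled addon cannot be loaded.
function isPrimeJS(n) {
  if (n <= 1) {
    return false;
  }
  for (let j = 2; j * j <= n; j++) {
    if (n % j === 0) {
      return false;
    }
  }
  return true;
}

// Hypothetical helper: prefer the native addon, otherwise use isPrimeJS.
function loadIsPrime() {
  try {
    const addon = require('./build/Release/prime');
    // The addon returns 1 or 0, so convert to a boolean for a uniform interface.
    return (n) => addon.isPrime32(n) === 1;
  } catch (err) {
    // Addon missing or not built for this platform.
    return isPrimeJS;
  }
}

const isPrime = loadIsPrime();
console.log(isPrime(19)); // true
console.log(isPrime(21)); // false
```

For benchmarking, the fallback should of course be reported as such, otherwise a failed build would quietly measure the JavaScript version twice.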

WebAssembly

WebAssembly offers the possibility of running native code on both the server and within the browser. Many people consider this to be the future path for greater performance within JavaScript, partly due to the flexibility offered. For the final test, I chose to use Rust as the native language. I found the following guide very helpful in building WebAssembly from Rust. Alternatively, you might read the Rust and WebAssembly book for more up-to-date information. The function itself required almost no modification from the native version of the tests.

    #[no_mangle]
    pub extern "C" fn is_prime(n: i32) -> bool {
        let mut result = true;
        if n <= 1 {
            result = false;
        }
        let mut j: i32 = 2;
        while j * j <= n {
            if n % j == 0 {
                result = false;
                break;
            }
            j += 1;
        }
        result
    }

The code can then be compiled to make the WASM file.

    $ rustup target add wasm32-unknown-unknown
    $ rustc --target wasm32-unknown-unknown -O --crate-type=cdylib prime.rs -o prime.big.wasm

The resulting file was rather large, but its size can be reduced with wasm-gc:

    $ cargo install --git https://github.com/alexcrichton/wasm-gc
    $ wasm-gc prime.big.wasm prime.wasm

It is now possible to use the WebAssembly from within JavaScript. The WASM must be loaded manually, initialised and then called:

    const fs = require('fs');

    // Loads a WASM file, instantiates it and passes the exported function to
    // runTest (the benchmark runner, defined elsewhere in the test code).
    function runWebassembly(fileName, fnName, message, done) {
      const source = fs.readFileSync(fileName);
      const env = {
        memoryBase: 0,
        tableBase: 0,
        memory: new WebAssembly.Memory({
          initial: 256
        }),
        table: new WebAssembly.Table({
          initial: 0,
          element: 'anyfunc'
        })
      };
      const typedArray = new Uint8Array(source);
      WebAssembly.instantiate(typedArray, {
        env: env
      }).then(result => {
        runTest(result.instance.exports[fnName], message);
        if (done) {
          done();
        }
      }).catch(e => {
        // Report loading or instantiation errors
        console.log(e);
      });
    }

    // prime.wasm is the file produced by the wasm-gc step above
    runWebassembly('./prime.wasm', 'is_prime', 'Starting webassembly rust');
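The loading steps (take the bytes, instantiate, pick out the export) can also be written with async/await. The sketch below is an illustration under stated assumptions, not the code used for the tests: `loadWasmExport` is a hypothetical helper, and so that the example runs without the compiled prime file, it uses a tiny hand-assembled stand-in module that exports an `add` function instead of `is_prime`.

```javascript
// Hypothetical helper: instantiates a WebAssembly module from raw bytes and
// returns one named export. `imports` is optional for modules that need none.
async function loadWasmExport(bytes, fnName, imports = {}) {
  const { instance } = await WebAssembly.instantiate(bytes, imports);
  const fn = instance.exports[fnName];
  if (typeof fn !== 'function') {
    throw new Error(`Export "${fnName}" not found`);
  }
  return fn;
}

// Stand-in module (NOT the Rust prime module): exports add(a, b) on two
// 32-bit integers. In practice the bytes would come from fs.readFileSync.
const addModule = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00,             // magic + version
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f,       // type: (i32, i32) -> i32
  0x03, 0x02, 0x01, 0x00,                                     // one function of that type
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00,       // export it as "add"
  0x0a, 0x09, 0x01, 0x07, 0x00, 0x20, 0x00, 0x20, 0x01, 0x6a, 0x0b // body: a + b
]);

loadWasmExport(addModule, 'add')
  .then((add) => console.log(add(2, 3))) // 5
  .catch((err) => console.error(err));
```

Returning the exported function from a promise lets the caller decide when to start timing, which keeps the one-off instantiation cost out of the measurements.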

It is also possible to use C++ in WebAssembly and for those interested, I suggest this guide.

The Results

All the tests ran reasonably quickly on my machine and I was able to produce similar results to the original video. Not exactly the same, but similar enough.

As expected, the pure JavaScript was the slowest and the native version the fastest, with the mixed versions just behind. This has very much answered my question. Yes, it is possible to gain a worthwhile speed boost by calling native functions from within JavaScript.

NodeJS N-API vs WebAssembly

The NodeJS N-API provided close to native performance, while WebAssembly was slightly slower. The difference was not large, but it was certainly noticeable. This is probably to be expected, as WebAssembly is a bytecode format that the engine must compile, rather than a true native binary.

If performance were the sole consideration then I would choose the NodeJS N-API. It enjoys the added benefit of being part of NodeJS itself. Getting gyp working on Windows is not always straightforward, but once achieved, NPM will then look after the compilation. However, I must admit that the boilerplate code is an extra layer of work. But this is probably to be expected when interfacing between languages. It’s not that different from working with V8 directly.

WebAssembly was a bit slower than native and required some intermediate work with the WASM, both in the compilation and then loading into the JavaScript. However, it did come with the benefit of writing native code without having to worry much about the interfacing into JavaScript. Furthermore, it is possible to adopt this approach for the browser. This is a significant advantage, though it didn’t feel as polished as the NodeJS N-API.

Source Code

The source code for these tests can be found on GitHub. These are straightforward tests and should run on any platform for those interested.

Final Thoughts

I have answered the original question posed at the start of this post. After the experiment, I would not hesitate to speed up slower JavaScript with native code. Furthermore, this extends the range of problems for which JavaScript would be considered viable. This does leave me unsure why some native addons are slow, but it’s probably nothing to do with the process itself. It would appear that the N-API approach offers greater performance, but it comes at the cost of flexibility and requires boilerplate interfacing code. WebAssembly is a bit slower but offers a cleaner interface, though it is perhaps a little less polished.

As an aside, this exercise also provided the opportunity to use a little Rust. I was able to convert the C++ program into Rust very easily. This was despite never having touched the language before. It was certainly a credit to Rust and quite refreshing. Overall I came away feeling that it is a comfortable language to program in. Performance-wise I found it to be identical to that of C++.

There are a couple of aspects from these tests I would like to explore in the future:

- How does JavaScript compare to other common languages such as Python, C# or Lua? Some of these allow for native code or include performant JIT compilers.

- How does the V8 engine’s optimising compiler affect the way JavaScript code should be optimised? For example, are there occasions when a simple but slower algorithm ends up faster due to the underlying optimisations from the engine?

Updated Nov 2023

I included a new header image.

Notice

The header image was AI generated by Microsoft Designer. All other photos & diagrams that appear on this blog are my own, unless otherwise cited.